A Brief(ish) History of the Web Universe - Part I: The Pre-Web

There are a couple of posts that I've been wanting to write, but in each of them I keep finding myself wanting to talk about historical context. Explaining it in place turns out to be too hard, and I've been unable to find a resource that unifies the bits I want to talk about. So I've decided it might be easier to write this history separately, mostly so that I can refer back to it. Here it is, "A Brief(ish) History of the Web Universe" aka "The Boring Posts", in a few parts. No themes, no punch, just history that I hope will help explain where my own perspectives on a whole bunch of things come from...

Background

Businesses, knowledge, government and correspondence were, for literally hundreds of years, built on paper documents. How did we get from that world to this? And how has that world, and our path, helped shape this one? I'm particularly interested in the question of whether some of those implications are good or bad - what we can learn from the past in order to improve on our future or understand our present. So how did we get here from there?

Arguably the first important step was industrialization. It changed the game by dramatically increasing the scale of challenges and creating new needs for efficiency. That, in turn, created a need for increasing agreement, beginning with standards for physical manufacture: first locally, and then nationally around 1916. World War II applied intense pressure, funded huge research budgets and drove international cooperation. A whole lot of important things shook out in the 1940s, and each developed more or less independently. I won't go into them much here except to note a few key points that help set the mental stage for what the world was like going into the story.

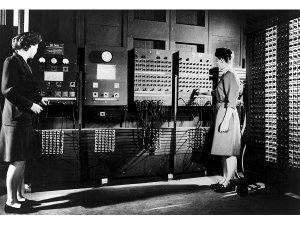

The word "computer", in anything resembling its modern sense, wasn't even a thing until 1946.

In 1947, ISO, the International Organization for Standardization, was founded. That same year, the transistor was invented at Bell Labs. In the late 1940s, the Berlin Airlift transported over 2.3 million tons of food, fuel and supplies by developing a "standard form" document that could be transmitted over just about any medium, including, for example, telegraph. This basic technique would later become "EDI" (Electronic Data Interchange) and the standard for commercial shipping and trade at scale, but it required very tight agreement and coordination on standard business documents and processes.

Transistors revolutionized things, but the silicon chips that really began the revolution didn't exist yet. Intel, which pioneered and popularized them, wouldn't even be founded until 1968.

During these interim decades, the number of people exposed to the idea of computers began, very slowly, to expand, and that gets pretty interesting because we start to see some forks in the road...

1960's Interchange, SGML and HyperStuff

In the mid 1960's Ted Nelson noted the flaw with the historical paper model:

Systems of paper have grave limitations for either organizing or presenting ideas. A book is never perfectly suited to the reader; one reader is bored, another confused by the same pages. No system of paper-- book or programmed text-- can adapt very far to the interests or needs of a particular reader or student.

He imagined a very new possibility with computers, which he described first in scholarly papers and then in books. He also saw it as an aid to authors, one that would help them evolve a work from random notes to outlines to more advanced works. He had a big vision. In describing it, he coined three important terms: Hypertext, Hypermedia (originally Hyperfilm) and Hyperlink. For years, the terms Hypertext and Hypermedia would cause some confusion: some (including, it seems to me, Nelson) considered media part of the text because it was serialized, while others considered text a subset of media. But this was all way ahead of its time. While these ideas were taking shape, the price point and capabilities just weren't really there. As he noted in the same paper:

The costs are now down considerably. A small computer with mass memory and video-type display now costs $37,000;

Another big aspect of his idea was unifying some of the underlying systems around files. Early computers just didn't agree on anything: there weren't standard chipsets, much less standard file types, programs, protocols, etc.

Markup

In 1969, in this early world of incompatibility, three men at IBM (Goldfarb, Mosher and Lorie) worked on the idea of using markup to give machines a simple, shared understanding of "documents", on top of which each could build specialized understanding, storage, retrieval or processing. It was called GML after their initials, but later "Generalized Markup Language". It wasn't especially efficient. It had nothing to do with HyperAnything, nor even with EDI in a technical sense. But it was comparatively easy to get rough agreement on, and flexible enough to actually get real work done. For example, you could send a GML document to a printer and define separately how precisely it would print. Here's what it looked like:

    :h1.Chapter 1: Introduction
    :p.GML supported hierarchical containers, such as
    :ol
    :li.Ordered lists (like this one),
    :li.Unordered lists, and
    :li.Definition lists
    :eol.
    as well as simple structures.
    :p.Markup minimization (later generalized and formalized in SGML),
    allowed the end-tags to be omitted for the "h1" and "p" elements.

But GML was actually a script: the tags indicated macros, which could be implemented differently. Over time, GML would inspire a lot, get pretty complicated and rather philosophical about its declarative nature, and eventually become SGML (Standard Generalized Markup Language). SGML would continue growing in popularity, especially in the print industry.
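To make the "tags as macros" idea a bit more concrete, here's a minimal Python sketch. It assumes a heavily simplified, hypothetical GML-like syntax (every tag is written `:name.` and its text runs until the next tag), and the `tokenize` and `render` functions and both macro sets are invented purely for illustration; this is not how IBM actually implemented GML. The only point is that one marked-up document can be expanded by two entirely different sets of macros: one for a plain-text "printer" and one that emits HTML-like tags.

```python
import html
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical, simplified GML-like syntax: a tag is ":name." and its text
# runs until the next tag. Real GML (and later SGML) had many more rules.
TAG = re.compile(r":(\w+)\.")

# A macro receives a tag's text plus shared state and returns output text.
Macro = Callable[[str, dict], str]


def tokenize(source: str) -> List[Tuple[str, str]]:
    """Split source into (tag, text) pairs; text before the first tag is dropped."""
    parts = TAG.split(source)  # ['', tag1, text1, tag2, text2, ...]
    return [(parts[i].lower(), parts[i + 1].strip()) for i in range(1, len(parts), 2)]


def render(source: str, macros: Dict[str, Macro]) -> str:
    """Expand each tag using whichever macro set the caller supplies."""
    state: dict = {}  # scratch space shared by macros (e.g. a list counter)
    out = []
    for tag, text in tokenize(source):
        macro = macros.get(tag)
        out.append(macro(text, state) if macro else text)  # unknown tag: keep text
    return "".join(out)


# Macro set 1: a plain-text "printer" with numbered lists.
def start_list(text: str, state: dict) -> str:
    state["n"] = 0  # restart numbering for each ordered list
    return text


def list_item(text: str, state: dict) -> str:
    state["n"] = state.get("n", 0) + 1
    return f"{state['n']}. {text}\n"


PRINTER_MACROS: Dict[str, Macro] = {
    "h1": lambda text, state: text.upper() + "\n",
    "p": lambda text, state: text + "\n",
    "ol": start_list,
    "li": list_item,
    "eol": lambda text, state: text + "\n",
}

# Macro set 2: HTML-like output for the very same document.
HTML_MACROS: Dict[str, Macro] = {
    "h1": lambda text, state: f"<h1>{html.escape(text)}</h1>",
    "p": lambda text, state: f"<p>{html.escape(text)}",
    "ol": lambda text, state: f"<ol>{html.escape(text)}",
    "li": lambda text, state: f"<li>{html.escape(text)}",
    "eol": lambda text, state: f"</ol>{html.escape(text)}",
}

SAMPLE = (
    ":h1.Introduction"
    ":p.GML tags are macros."
    ":ol.:li.Ordered lists:li.Unordered lists:eol."
    "End of list."
)

if __name__ == "__main__":
    print(render(SAMPLE, PRINTER_MACROS))
    print(render(SAMPLE, HTML_MACROS))
```

Swapping the macro dictionary changes the output without touching the document itself, which is roughly the kind of separation that let you decide separately how a GML document would print. Real GML macros lived in IBM's own text-formatting tooling, not in anything like this.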

The changing landscape

For the next decade, computers got faster, cheaper, smaller and more popular in business, science and academia, and all of these threads matured and evolved on their own.

Networks were arising too, and the need for standardization there seemed obvious. For about 10 years, international committees attempted to create a standard network stack, but the effort was cumbersome, complex and not really getting there. Then, late in this process, Vint Cerf left the committee. He led work on the protocol focused on rough consensus and running code and, in very short order, the basic Internet was born.

Around this same time, a hypertext system based on Nelson's ideas, called "Guide", was created at the University of Kent for Unix workstations.

Rise of the Computers

In the 1980's Macs and PCs, while still expensive, were finally becoming affordable enough that some regular people could hope to purchase them.

Return of the HyperStuff

Guide was commercialized, ported to the Mac and PC, and later greatly improved by a company called OWL (Office Workstations Limited), led by a man named Ian Ritchie. It brought one of the first real hypertext systems to desktops in 1985. In 1986, Guide won a British Computer Society award. Remember "Guide" and "Ian Ritchie", because they're going to come back up.

Two years later, people were really starting to take notice of "HyperText" (and beginning to over-generalize the term a little; frequently it was really "HyperMedia"). In 1987, an application called "HyperCard" was introduced, and its authors convinced Apple to give it away free to all Mac users. It was kind of a game changer.


HyperCard was a lot like Guide in many ways, but with a few important details we'll come back to. The world of HyperCard was built of "stacks", each a pile of "cards" full of GUI: forms, animations, information and interactions which could be linked together and scripted to do all sorts of useful things. Cards had an innate "order" and could link to and play other media; the state of things in a card or stack was maintained through scripting. They were bitmap-based in presentation and cleverly scalable.

Screenshot of VideoWorks and the "score"


That same year, 1987, a product called VideoWorks by a company named MacroMind was released. In fact, if you got a Mac, you saw it, because it was used to create the interactive guided tour that shipped with the machine. You could also buy it to author your own content.

One interesting aspect of VideoWorks was its emphasis on time. Time is kind of an important quality of non-static media, so if you're interested in the superset of hypermedia, you need the concept of time. Thus, the makers of VideoWorks included a timeline upon which things were "scored". With this kind of hypermedia, authors could let users move back and forth through the score at will. This was kind of slick; it made sense to a lot of people, and it caught on. The product later became "Director", a staple for producing high-end, wowing multimedia content on the desktop, CD-ROMs for kiosks and so on.

By the late 1980s, OWL had really come to focus on the PC version of Guide. HyperCard was free on the Mac and, as Ian Ritchie would later say...

You can compete on a lot of things, but it's hard to compete with free...

The emergence of an increasingly fuzzy line...

Note that most of the systems of this time actually dealt, in a way, with hypermedia, in the sense that they didn't stick so closely to the fundamental, primitive idea based on paper. All of them could be scripted, so, as you might imagine, applications were a natural outgrowth. The smash game Myst was actually just a really nice and super advanced HyperCard stack!

The Stage is Set...

The Internet was there. It was available, even in use, but it was pretty basic and kind of cumbersome. Many people who used the Internet perhaps didn't even realize what the Internet really was, because they were using it through an online service like CompuServe or Prodigy.

But once again, as new doors were opened, some saw new promise.

The Early 1990s

I've already mentioned that SGML at first had really nothing to do with HyperMedia, but here's where it comes back in. The mindset around HyperCard was stacks and cards. The mindset around VideoWorks/Director was video. The mindset of OWL was documents. SGML was a mature thing, and having something to do with SGML was kind of a business advantage.

Guide had SGML-based documents. More than that, it called these "Hypertext Documents" and their SGML definition was called "HyperText Markup Language" (which they abbreviated HML) and it could deliver them over networks. Wow. Just WOW, right? Have you even heard the term "Web" yet? No.

But wait - there's more! Looking at what else was going on, OWL had much advanced Guide on the PC and by now it had integrated sound and video and advanced scripting ability too. While it was "based" on documents, it was so much more. What's more, while all of this was possible, it was hidden from the average author - it had a really nice GUI that allowed both creation and use. That it was SGML underneath was, to many, a minor point or even a mystery.

Web Conception

This is the world into which HTML was conceived. I say "conceived" rather than "born" or "built" because Tim Berners-Lee had been working out his idea and refining it for a few years. He talked to anyone who would listen about a global, decentralized, read-write system that would really change the Internet. He had worked out the idea of an identifier, a markup language and a protocol, but that was about it.

And here's the really interesting bit: He didn't really want to develop it, he wanted someone else to. As Tim has explained it several times...

There were several commercial hypertext editors and I thought we could just add some internet code, so that the hypertext documents could then be sent over the internet. I thought the companies engaged in the then fringe field of hypertext would immediately grasp the possibilities of the web.

Screenshot of OWL's Guide in 1990


Remember OWL and Guide? Tim thought they would be the perfect vehicle; they were his first choice. So, in November 1990, when Ian Ritchie took Guide to a trade show in Versailles to show off its hypermedia, Tim approached him and tried hard to convince him to make OWL the browser of the Web. However, as Tim notes, "Unfortunately, their reaction was quite the opposite..."

Note that nearly all of the applications discussed thus far, including OWL's, were commercial endeavors. In 1986, authors who wanted to use OWL to publish bought a license for about $500, and viewers then licensed readers for about $100. To keep this in perspective, in adjusted dollars this is roughly $1,063 for writers and $204 for readers. This is just how software was done, but it's quite different from the open source ethos of the Internet. A lot of people initially assumed that making browsers would be a profitable endeavor. In fact, Marc Andreessen would later make a fortune on Netscape, in part because of an assumption that people would buy it. It was, after all, a killer app.

It's pretty interesting to imagine where we would be now if Ritchie had been able to see it. What would the Web have been like with OWL's capabilities as a starting point, and what impact might the commercial nature of the product have had on the Web's history and its ability to catch on? Hard to say.

However, with this rejection (and others like it), Tim realized

...it seemed that explaining the vision of the web was exceedingly difficult without a web browser in hand, people had to be able to grasp the web in full, which meant imagining a whole world populated with websites and browsers. It was a lot to ask.

He was going to have to build something in order to convince people. Indeed, Ian Ritchie would later give a TED talk about this, in which he admits that when he saw Mosaic two years later he realized "yep, that's it": he'd missed it.

A Final Sidebar...

PenPoint Tablet in 1991


At very nearly the same time, something that was neither HyperStuff nor SGML nor Internet-related entered the picture. It was called "PenPoint". Chances are pretty good that you've never heard of it, and it's probably going to be hard to see how, but it will play an important part in the story later. PenPoint was, in 1991, an operating system for tablet computers, with stylus and gesture support and vector graphics.

Think about what you just read for a moment and let it sink in.

If you've never seen PenPoint, you should check out this video from 1991, because it's kind of amazing. And here's what it has to do with our story: it failed. It was awesomely ahead of its time and it just... failed. But not before an application was developed for it called "SmartSketch FutureSplash" (remember the name), a vector-based drawing tool which would have been kind of sweet for that device in 1991.

In Part II: Time, I explain how this plays an important part in the story.

Many thanks to folks who slogged through and proofread this dry post for me: @mattur @simonstl and @sundress.

The First Digital Computer - ENIAC "For a decade, until a 1955 lightning strike, ENIAC may have run more calculations than all mankind had done up to that point." from computerhistory.org
