Listen Up:

The Web Speech APIs Part Deux

Speech Recognition

- Long history, lots of fails trying to standardize

- Windy path, but we wound up with (buggy/imperfect) TTS support in all modern browsers

- ...it isn't a standard, it's from an unofficial draft that contained stuff about voice recognition too...

Not nearly as widely implemented...

Constructor...

let voiceRecognition = new webkitSpeechRecognition()

It's not listening.

and you don't know if it ever will.
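Since support is patchy, it may be worth checking that a constructor exists before calling it. A minimal sketch, assuming a browser might expose either the prefixed name used here or the unprefixed `SpeechRecognition` name from the draft; `getRecognitionCtor` is a hypothetical helper, not part of the API.

```javascript
// Hypothetical helper: returns the recognition constructor if the
// given global object exposes one, otherwise null.
function getRecognitionCtor(globalObj) {
  return globalObj.SpeechRecognition ||
         globalObj.webkitSpeechRecognition ||
         null
}

// In a browser you might use it like this:
//   const Ctor = getRecognitionCtor(window)
//   if (Ctor) { let voiceRecognition = new Ctor() }
```

Passing the global object in, rather than reading `window` directly, just keeps the helper easy to try outside a browser.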

voiceRecognition.start()

The draft says before .start()'ing it needs to check...

do you have permission?

A brief(-ish) sidebar on User-Agent/powerful features

Standards and browsers are very sensitive to this.

Watch it, Fokker...

Tough problem.

YMMV.

Most of this is specified in only very loose terms.

Sites have to ask for permission

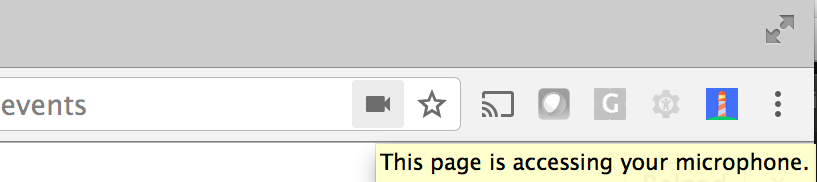

This is not listening (or watching). It has permission to...

(we'll come back to the distinction)

You have to be able to revoke/manage that easily.

In fact, you have to be able to revoke/manage all permissions easily.
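If you want to peek at the permission state before calling .start(), the separate Permissions API can sometimes tell you. A rough sketch, assuming the browser supports `navigator.permissions.query` and recognizes the `'microphone'` name (not all do, hence the fallback); `microphonePermission` is a hypothetical helper.

```javascript
// Hypothetical helper: asks the Permissions API (where available) about
// the microphone. Resolves to 'granted', 'denied', 'prompt', or
// 'unknown' when the browser can't or won't answer.
async function microphonePermission(nav) {
  if (!nav || !nav.permissions || !nav.permissions.query) return 'unknown'
  try {
    const status = await nav.permissions.query({ name: 'microphone' })
    return status.state
  } catch (err) {
    // Some browsers reject permission names they don't recognize.
    return 'unknown'
  }
}

// In a browser: microphonePermission(navigator).then(console.log)
```

Having permission is still not the same thing as listening; this only reports what the user has already decided.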

</sidebar>

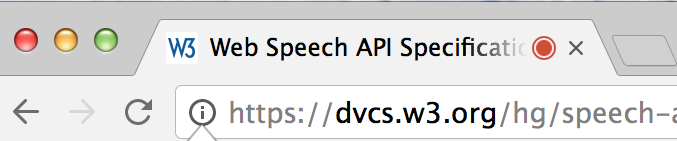

The draft says that the browser has to tell you

when it's actually listening

Now it's listening

Assuming you have permission, after

.start() it is listening.

Now what?

The voiceRecognition object pumps lots of events.

These three are the really useful ones...

voiceRecognition.onstart = (evt) => {

// Congratulations, you have permission.

}

draft: "Fired when the recognition service has begun to listen to the audio with the intention of recognizing"

start

voiceRecognition.onerror = (evt) => {

// What could possibly go wrong?

}

draft: "Fired when a speech recognition error occurs"

error
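The error event carries an .error code that says roughly what went wrong. A small sketch of turning that into something readable, assuming the codes below (which are named in the draft) are what your browser actually reports; `describeRecognitionError` is a hypothetical helper and the messages are my own wording.

```javascript
// Hypothetical helper: maps the event's .error code to a message.
// Unlisted codes fall through to a generic message.
const recognitionErrorMessages = new Map([
  ['not-allowed', 'Permission to use the microphone was denied.'],
  ['service-not-allowed', 'The browser will not allow this speech service.'],
  ['no-speech', 'No speech was detected.'],
  ['audio-capture', 'Audio capture failed.'],
  ['network', 'The recognition service could not be reached.'],
  ['aborted', 'Listening was aborted.']
])

function describeRecognitionError(evt) {
  return recognitionErrorMessages.get(evt.error) ||
    `Recognition failed (${evt.error}).`
}

// In a handler:
//   voiceRecognition.onerror = (evt) => console.warn(describeRecognitionError(evt))
```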

voiceRecognition.onresult = (evt) => {

// YAY!!!

}

draft: "Fired when the speech recognizer returns a result"

result

Ok... wow... That's a lot simpler than I expected.

So.. how do I get the text transcript

of the sound? Is it the event?

voiceRecognition.onresult = ( evt ) => {

console.log(`

here's what I heard:

${evt.results[0][0].transcript}

`)

}

evt.results[0][0].transcript

Wait...

.results is a multi-dimensional array?

lol, not even...

They're Array-likes.

The good news is, for most use cases,

there will only be one in each collection,

and you want the 0'th one.
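Array-likes have a .length and numeric indexes but none of the Array methods, so if you want .map() and friends you have to convert first. A minimal sketch; `firstTranscripts` is a hypothetical helper name.

```javascript
// Array.from accepts anything with a .length and numeric indexes,
// so it can turn these Array-likes into a real Array.
// Here we keep the 0'th alternative's transcript from each result.
function firstTranscripts(results) {
  return Array.from(results, (result) => result[0].transcript)
}

// In a handler: firstTranscripts(evt.results)
```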

Here's why the first one is an array...

// by default, the .continuous

// property is false.

// Once a result is received,

// it stops listening... But...

voiceRecognition.continuous = true;

voiceRecognition.onresult = (evt) => {

// Now it doesn't stop automatically

// and evt.results.length is one

// greater with each new recognition

}

Implementations buggy, possibly contentious.
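If the list really does grow by one with each recognition, the newest phrase is the last entry rather than the 0'th. A sketch under that assumption; `latestTranscript` is a hypothetical helper, and given the bugginess just mentioned, treat it as a starting point.

```javascript
// Hypothetical helper for continuous mode: returns the transcript of
// the most recent result, or '' if there are no results yet.
function latestTranscript(evt) {
  const results = evt.results
  if (!results || results.length === 0) return ''
  return results[results.length - 1][0].transcript
}

// In a handler:
//   voiceRecognition.continuous = true
//   voiceRecognition.onresult = (evt) => console.log(latestTranscript(evt))
```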

"You just can restart it again."

♬ ding

You: It was the best of times, it was the worst of times, bleep ♬

it was ♬ ding the age of wisdom, it was the age of foolishness, bleep ♬

it was the ♬ ding epoch of belief, it was the epoch of incredulity bleep ♬

it was the season of ♬ ding Light, it was the season of Darkness

Pesky permissions on mobile tho...

♬ ding

You: It was the best of times, it was the worst of times, bleep ♬

{computer speaks 'I heard it was the best of times it was the worst of times while you are saying

it was the age of wisdom, it was the age of foolishness}

♬ ding

{computer speaks 'I heard I heard it was the age of best of wisdom at times of foolishness' while you are

saying the next bit... even if you stop talking.. }

bleep ♬

{potentially infinite ♬ ding bleep ♬ loop of 'I heards' hearing 'itself'}

Add an 'I heard' confirmation for extra lolz and talk over each other..

let speechRecognitionResult = evt.results[0]

Also not an Array, also by default has exactly 1 item.

Ok... So there's really just one 'result'

voiceRecognition.maxAlternatives = 10

voiceRecognition.onresult = (evt) => {

let alternatives = evt.results[0]

//...

}

alternatives.length can be 1...10.

Each of these also has a

.confidence attribute (float 0...1)

Let's illustrate all this with a game.

Each question will show you something and ask you to describe it...

<div id="q-1">

<div class="prompt">...</div>

<img src="something">

<button class="listen">...listen...</button>

<pre class="out">...output here...</pre>

</div>

All but the last will use a pattern like this to listen and output...

let question = document.querySelector('#q-5'),

outputEl = question.querySelector('.out'),

listenBtn = question.querySelector('.listen'),

recog0 = new webkitSpeechRecognition()

recog0.onresult = (evt) => {

outputEl.innerHTML =

`I heard you say:

${evt.results[0][0].transcript}`

}

recog0.onerror = (evt) => {

alert('whoops')

}

listenBtn.addEventListener('click', () => {

recog0.start()

})

In the last one we'll use .maxAlternatives...

let question = document.querySelector('#q-1'),

outputEl = question.querySelector('.out'),

listenBtn = question.querySelector('.listen'),

recog0 = new webkitSpeechRecognition(),

dumpAlternatives = (results) => { /* loop, concat, return a string */ }

recog0.onresult = (evt) => {

outputEl.innerHTML =

`I heard you say:

${dumpAlternatives(evt.results)}`

}

recog0.onerror = (evt) => {

alert('whoops')

}

listenBtn.addEventListener('click', () => {

recog0.start()

})
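The body of dumpAlternatives is elided above, so here is one way it might look. This is an assumption, not the version used in the demo: it lists every alternative of the first result with its confidence, one per line, and relies on maxAlternatives having been set on the recognizer beforehand.

```javascript
// One possible dumpAlternatives (hypothetical; the original is elided).
// results[0] is an Array-like of alternatives, so convert it first.
function dumpAlternatives(results) {
  return Array.from(results[0])
    .map((alt, i) =>
      `${i + 1}. ${alt.transcript} (confidence: ${alt.confidence.toFixed(2)})`)
    .join('\n')
}
```

Because the output lands in a pre element, the newlines show up as separate lines without any extra markup.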

Say hello to my friend Bruce Lawson...

Best guess translation will appear here


Bruce is wearing a ________

I'll tell you what I heard here

Fill in the blanks...

The _____ Lama and Bishop Desmond _____?

The dolly Lama and Bishop Desmond 22?

If we listen for just those two values...

Context matters.

Confidence/Quality are a little arbitrary.

If I read "This is a photo of the Dalai Lama and Bishop Desmond Tutu" there's more context that can be used

... if the service can do that

So, that's why alternatives... It's one way you can maybe deal with this problem of ambiguity.
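When you already know roughly what answer you're hoping for, you can look through the alternatives for it instead of trusting the top guess. A minimal sketch, assuming maxAlternatives was set above 1; `findMatchingAlternative` is a hypothetical helper and the substring matching is deliberately naive.

```javascript
// Hypothetical helper: returns the first alternative whose transcript
// contains every expected word (case-insensitive), or null if none do.
function findMatchingAlternative(result, expectedWords) {
  const wanted = expectedWords.map((w) => w.toLowerCase())
  const match = Array.from(result).find((alt) => {
    const heard = alt.transcript.toLowerCase()
    return wanted.every((w) => heard.includes(w))
  })
  return match || null
}

// In a handler:
//   findMatchingAlternative(evt.results[0], ['dalai', 'tutu'])
```

It doesn't fix the ambiguity, it just gives a lower-ranked but correct guess a chance to win.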

To Recap...

- .results is a SpeechRecognitionResultList

- It's not an Array

- It probably contains exactly 1 item

- That item is a SpeechRecognitionResult

- It's not an Array either

- Unless you set maxAlternatives, it will contain exactly 1 item

- That item is a SpeechRecognitionAlternative

- It has a .transcript property and a .confidence property

There's lots more on my blog on the native API and its kinks and thoughts on how we might improve that.