Brian Specific Failures

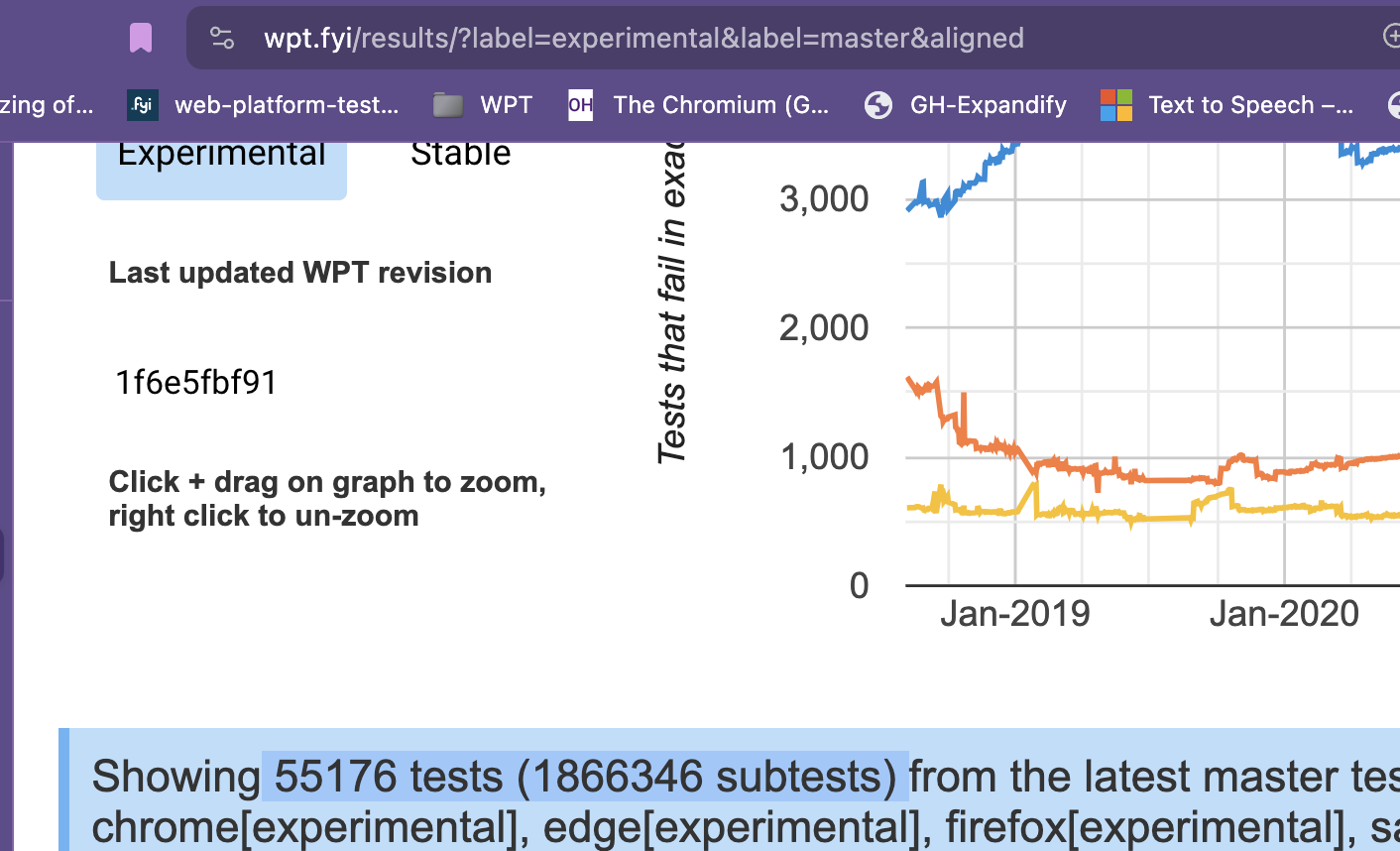

Through my work in Interop, I've been learning stuff about WPT. Part of this has been driven by a desire to really understand the graph on the main page of wpt.fyi about browser specific failures (like: which tests?). You might think this is stuff I'd already know, but I didn't. So, I thought I'd share some of the things I learned along the way, just in case you didn't know either.

Did you know that wpt.fyi has lots of ways to query it? It does!

You can, for example, type nameOfBrowser:fail (or nameOfBrowser:pass) into the search box, and you can even string several of them together to ask interesting questions!
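These queries also end up in the page's URL, as the q parameter of a results link (like the Safari link in the list further down, which encodes chrome:fail safari:pass firefox:fail). Here is a minimal Python sketch that builds such a link from browser:status pairs, assuming that URL shape stays stable. The helper names build_query and results_url are hypothetical, and the label=master&label=experimental&aligned parameters are simply copied from that example link.

```python
from urllib.parse import quote

# Base URL and fixed parameters copied from an existing wpt.fyi results link;
# these are assumptions about the current URL format, not a documented API.
BASE = "https://wpt.fyi/results/"
FIXED_PARAMS = "label=master&label=experimental&aligned"


def build_query(conditions):
    """Join (browser, status) pairs into a space-separated search string."""
    return " ".join(f"{browser}:{status}" for browser, status in conditions)


def results_url(query):
    """Percent-encode the search string (':' -> %3A, ' ' -> %20) as the q= parameter."""
    return f"{BASE}?{FIXED_PARAMS}&q={quote(query, safe='')}"


query = build_query([("chrome", "fail"), ("safari", "pass"), ("firefox", "fail")])
print(query)
print(results_url(query))
```

Because the whole query lives in the URL, any interesting search can be shared as a plain link.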

What tests fail in every browser?

It seemed like an interesting question: Are there tests that fail in every single browser?

A query for that might look like: chrome:fail firefox:fail edge:fail safari:fail (using the browsers that are part of Interop, for example). And, as of this writing, it tells me...

There are 2,724 tests (15,718 subtests) which fail in every single browser!

Wow. I didn't expect there to be zero, but that feels like kind of a lot of tests to fail in every browser, doesn't it? How can that even happen? To be totally honest, I don't know!
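As far as I can tell, stringing terms together means every term has to hold for a test to match (a logical AND). A toy Python model of that idea follows, using made-up test paths and only pass/fail statuses; it is not how wpt.fyi actually evaluates queries.

```python
# Hypothetical per-test results: {test path: {browser: status}}.
# Paths and statuses are made up; real results have more statuses than pass/fail.
RESULTS = {
    "/css/a.html": {"chrome": "fail", "firefox": "fail", "edge": "fail", "safari": "fail"},
    "/css/b.html": {"chrome": "pass", "firefox": "fail", "edge": "pass", "safari": "fail"},
    "/dom/c.html": {"chrome": "fail", "firefox": "fail", "edge": "fail", "safari": "pass"},
}


def parse_query(query):
    """Split "chrome:fail firefox:fail" into [("chrome", "fail"), ("firefox", "fail")]."""
    return [tuple(term.split(":", 1)) for term in query.split()]


def search(results, query):
    """Return test paths where every browser:status term holds (logical AND)."""
    terms = parse_query(query)
    return sorted(
        path
        for path, statuses in results.items()
        if all(statuses.get(browser) == status for browser, status in terms)
    )


print(search(RESULTS, "chrome:fail firefox:fail edge:fail safari:fail"))
# ['/css/a.html']
print(search(RESULTS, "chrome:fail firefox:fail"))
# ['/css/a.html', '/dom/c.html']
```

Adding terms can only narrow the result set, which is why the four-browser query is so much smaller than any single browser:fail query.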

A chicken and egg problem?

The purpose of WPT is to test conformance to a standard, but this is... tricky. Tests can be used to discuss specs as they are being written, or to highlight things that are yet to be implemented, and WPT doesn't have the same kind of guardrails as specs. Things can get committed there that aren't "real" or yet agreed upon. Frequently the first implementers and experimenters write tests. And it's not uncommon that things then change, or sometimes the specs just never go anywhere. Maybe those tests sit in limbo, "hoping" to advance?

WPT asks that tests for early things like this, which don't yet have agreement, be placed in a /tentative directory or have .tentative in their file names.
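That convention is simple enough to check mechanically. A rough Python sketch, assuming a test counts as tentative when some directory in its path is named tentative or its file name contains .tentative. (the is_tentative helper and the example paths are hypothetical, not wpt.fyi's logic):

```python
from pathlib import PurePosixPath


def is_tentative(test_path):
    """Rough check for WPT's tentative convention (a sketch, not wpt.fyi's logic).

    Assumes a test is tentative if any directory in its path is named
    "tentative", or its file name contains ".tentative." (e.g. foo.tentative.html).
    """
    path = PurePosixPath(test_path)
    return "tentative" in path.parent.parts or ".tentative." in path.name


# Hypothetical paths for illustration.
print(is_tentative("/css/example/tentative/grid.html"))      # True
print(is_tentative("/html/example/feature.tentative.html"))  # True
print(is_tentative("/dom/example/not-tentative.html"))       # False
```

Note that a check like this only sees what the path says; a test for an early, unagreed feature that didn't follow the naming guidance would slip through.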

Luckily, thanks to the query API, we can see which of the tests above fall into that category by adding is:tentative to our query (chrome:fail firefox:fail edge:fail safari:fail is:tentative). We can see that, indeed...

348 of the tests (1,911 subtests) that fail in every single browser are marked as tentative.

The query language supports negating parameters with an exclamation mark (!), so we can adjust the query (chrome:fail firefox:fail edge:fail safari:fail !is:tentative) and see...

So, I guess .tentative doesn't explain most of it (or maybe some of those just didn't follow that guidance). What does? I don't know!
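In a toy model, a leading ! just flips a single term. One consequence: for any fixed set of results, the is:tentative and !is:tentative versions of a query split its matches into two groups with no overlap. This Python sketch uses hypothetical data and helper names, with a boolean flag standing in for however wpt.fyi decides is:tentative.

```python
# Hypothetical results; the "tentative" flag stands in for is:tentative.
RESULTS = {
    "/x/tentative/a.html": {"tentative": True, "chrome": "fail", "firefox": "fail"},
    "/x/b.html": {"tentative": False, "chrome": "fail", "firefox": "fail"},
    "/x/c.html": {"tentative": False, "chrome": "pass", "firefox": "fail"},
}


def term_holds(test, term):
    """Evaluate a single query term; a leading "!" negates it."""
    if term.startswith("!"):
        return not term_holds(test, term[1:])
    if term == "is:tentative":
        return test["tentative"]
    browser, status = term.split(":", 1)
    return test.get(browser) == status


def search(results, query):
    """Return paths of tests for which every term in the query holds."""
    terms = query.split()
    return sorted(
        path for path, test in results.items()
        if all(term_holds(test, term) for term in terms)
    )


all_fail = search(RESULTS, "chrome:fail firefox:fail")
tentative = search(RESULTS, "chrome:fail firefox:fail is:tentative")
not_tentative = search(RESULTS, "chrome:fail firefox:fail !is:tentative")
print(tentative, not_tentative)
# ['/x/tentative/a.html'] ['/x/b.html']
```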

Poking around, I see there's even one ACID test that literally everyone fails. Maybe that's just a bad test at this point? Maybe all of the tests that match this query need to be reviewed? I don't know!

You can also simply query all(status:fail), which will show pretty much the same thing for whichever browsers you happen to be showing. I like the explicit version for introducing this, though, as it's very clear from the query itself which "all" I'm referring to.
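The difference matters because all(...) is relative to the browsers on the page. In this toy Python model (hypothetical data; all_status is not a wpt.fyi function), the same test matches all(status:fail) with the four Interop browsers shown, but stops matching once a fifth, WebKit GTK, is added.

```python
# Hypothetical statuses for a single test across five browser runs.
# "webkitgtk" is a made-up key for illustration.
STATUSES = {
    "chrome": "fail",
    "edge": "fail",
    "firefox": "fail",
    "safari": "fail",
    "webkitgtk": "pass",
}


def all_status(statuses, shown_browsers, status):
    """Toy model of all(status:X): True when every *shown* browser has that status."""
    return all(statuses[browser] == status for browser in shown_browsers)


interop = ["chrome", "edge", "firefox", "safari"]
print(all_status(STATUSES, interop, "fail"))                  # True
print(all_status(STATUSES, interop + ["webkitgtk"], "fail"))  # False
```

The explicit chrome:fail firefox:fail edge:fail safari:fail form gives the same answer no matter which browsers are displayed.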

Are there any tests that only pass in one browser?

I also wondered: Hmm... If there are tests that fail in exactly one browser, and we've just shown there are a bunch that pass in none, how many are there that pass in exactly one? That's pretty interesting to look at too:

- 5,037 tests (36,446 subtests) which pass in Chrome, but not in Safari or Firefox

- 1,769 tests (14,273 subtests) which pass only in Firefox

- 545 tests (3,389 subtests) which [pass only in Safari](https://wpt.fyi/results/?label=master&label=experimental&aligned&q=chrome%3Afail%20safari%3Apass%20firefox%3Afail)
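Each of those "passes in only one browser" queries is shaped like chrome:fail safari:pass firefox:fail, with the pass moved around. Counting all three at once over a toy dataset might look like this Python sketch (hypothetical data and helper name; with only pass and fail statuses, "not pass" and "fail" coincide, which isn't true of real results where a test can also time out):

```python
from collections import Counter

BROWSERS = ("chrome", "firefox", "safari")

# Hypothetical results: {test path: {browser: status}}.
RESULTS = {
    "/t1.html": {"chrome": "pass", "firefox": "fail", "safari": "fail"},
    "/t2.html": {"chrome": "pass", "firefox": "pass", "safari": "fail"},
    "/t3.html": {"chrome": "fail", "firefox": "fail", "safari": "pass"},
    "/t4.html": {"chrome": "fail", "firefox": "fail", "safari": "fail"},
}


def sole_passer(statuses):
    """Return the only browser that passes, or None if zero or several pass."""
    passing = [b for b in BROWSERS if statuses[b] == "pass"]
    return passing[0] if len(passing) == 1 else None


counts = Counter(sole_passer(s) for s in RESULTS.values())
counts.pop(None, None)  # drop tests that pass in zero or in several browsers
print(dict(counts))  # {'chrome': 1, 'safari': 1}
```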

Engines and Browsers: Tricky

Often, when we're showing results, we're using the 3 flagship browsers as a proxy for engine capabilities. We do that a lot, like in the Browser Specific Failures (BSF) graph at the top of wpt.fyi, but... it's an imperfect proxy.

For example, there are also tests that fail only in Edge: as of this writing, 1,456 tests (1,727 subtests).

Or, there are also tests that fail uniquely in WebKit GTK: 1,459 tests (1,740 subtests) of those.

Here's where there's a bit of a catch-22, though. BSF is really useful for the browser teams, so links like the above are actually very handy if you're on the Edge or GTK teams. But we can't add those to the BSF chart, because that would really change the meaning of the whole thing. What it kind of wants to be is "Engine Specific Failures", but that's not actually directly measurable.

Brian Specific Failures...

Below is an interesting table which links to query results that show actual tests that report as failing in only one of the three flagship browsers.

| Browser | Non-tentative tests (subtests) that fail uniquely among these browsers |
|---|---|
| Chrome | 1,345 tests (12,091 subtests) |
| Firefox | 2,290 tests (16,791 subtests) |
| Safari | 4,263 tests (14,867 subtests) |

If that sounds like the data for the Browser Specific Failures (BSF) graph on the front page of wpt.fyi, yes. And no. I call this the "Brian Specific Failures" (BSF) table.

I think this is about as close as we're likely to get, in the short term, to a linkable set of tests that fail, if you'd like to explore them. The BSF graph is also, I believe, more discriminating than the simple "pass" and "fail" that we're using here. Like, what do you do if a test times out? Are there intermediate or currently unknown statuses?

It was also kind of interesting, for me at least, to realize while looking at the data just how different the situation looks depending on whether you are looking at tests or subtests. Firefox fails the most subtests, but Safari fails the most tests. The BSF graph "scores" subtests as decimal fractions of a whole test.
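To see why tests and subtests can rank browsers differently, here is a simplified Python sketch of fractional scoring. It assumes each test contributes the fraction of its subtests that fail only in one browser, so no single test can count for more than 1. This is my rough model of the idea, not the BSF graph's actual code, and the numbers are made up.

```python
from fractions import Fraction

# Hypothetical: for each test, how many subtests fail *only* in one browser,
# and how many subtests the test has in total.
TESTS = [
    {"unique_failures": 2, "subtests": 10},   # contributes 2/10
    {"unique_failures": 1, "subtests": 1},    # contributes 1
    {"unique_failures": 0, "subtests": 50},   # contributes 0
    {"unique_failures": 50, "subtests": 50},  # contributes 1
]


def fractional_score(tests):
    """Sum per-test fractions, so each test is worth at most 1."""
    return sum((Fraction(t["unique_failures"], t["subtests"]) for t in tests), Fraction(0))


def raw_subtest_count(tests):
    """Plain count of uniquely failing subtests, with no per-test normalization."""
    return sum(t["unique_failures"] for t in tests)


print(fractional_score(TESTS))   # 11/5 (i.e. 2.2 tests)
print(raw_subtest_count(TESTS))  # 53
```

One test with 50 uniquely failing subtests dominates the raw subtest count, but it is worth just 1 in the fractional score.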

It's pretty complicated to pull any of this off actually. Things go wrong with tests and runs, and so on. I also realized that all of these scores are inherently a little imperfect.

For example, if you look at the top of the page, it says (as I am writing this) that there are 55,176 tests (1,866,346 subtests).

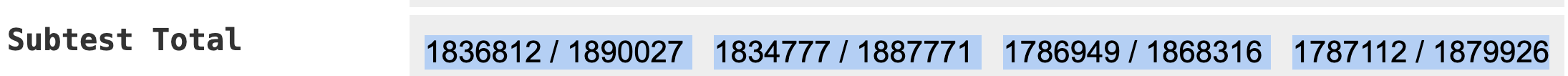

But if you look to the bottom of the screen, the table has a summary with the passing/total subtests for each browser. As I am writing this it says:

You might note that none of those lists the same number of subtests!

Anyway, it was an interesting rabbit hole to dive into! I have further thoughts on the wpt.fyi dashboard page, which I'll share in another post, but I'd love to hear about any interesting queries you come up with or questions you have. I probably don't know the answers, but I'll try to help find them!